The Shape of AI

We are only just beginning to see the form that this new technology is taking in our products and our experiences. While we develop the patterns to design for it and with it intelligently, we must also be aware of how it is shaping us in return.

A paradigm shift

If you are reading this, chances are you think a lot about software, digital experiences, or content. Maybe you’re a designer, or an engineer, or a content writer. Maybe you work for a company that designs or builds digital products. Maybe you’re a founder.

No matter who you are, you’re probably noticing that things are changing, fast.

And yet, not as fast as you might expect.

We are seeing new features using AI pop up, but they mostly feel the same right now. Chatbots that you can direct, summaries of long articles or transcripts, pictures that look cool but maybe feel a little off…

Even the companies popping up in this space seem to be solving similar problems, or solving different problems in similar ways.

Either AI is totally overhyped or we are standing on the edge of what is sure to be a very big cliff, with no idea how deep it goes.

I think there’s no way it’s the former.

But I also don’t know what’s over the edge. I don’t know how much this change is going to affect our daily lives, our jobs, our relationship to technology, and the role technology plays in the products and experiences we interact with constantly.

I’m paying attention though.

Slow at first and then all at once

Even if technology feels overwhelming at times, up until now, and for the most part, we have felt in control. We can blame bots, and big tech, and bad actors, and even “the algorithm” for the things we don’t like, but until recently I rarely talked to someone who felt like they truly didn’t know what their computer was doing.

That started to shift a bit as voice devices like Alexa appeared, raising fears of ever-listening machines. Waves of misinformation in recent political cycles raised the alarm.

But then in the last 6 months or so it seems like all hell has broken loose.

Is that post written by a human or ChatGPT? Is that photo of your house real? Did that politician really say that, or did that celebrity really endorse that product? Will I be laid off tomorrow when a computer takes my job?

Can we control any of this?

Now I’m hearing questions like this daily. I don’t have the answers to them, but we can find clues to those answers in the design of the products and experiences we interact with.

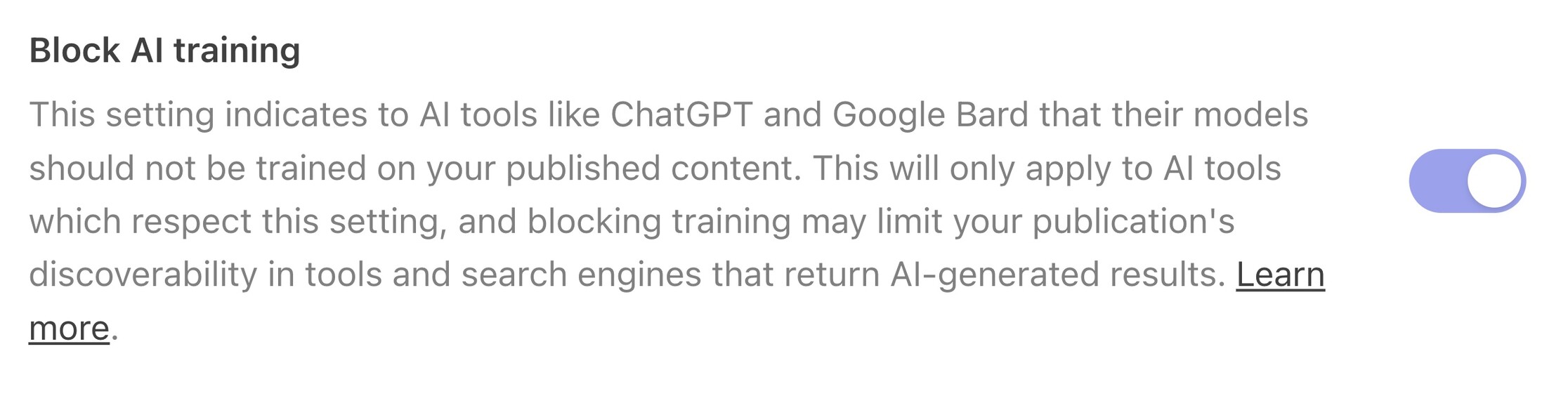

Take this platform where I published my newsletters. Hidden deep in the settings, half a page down, turned off by default, is this option:

Think about the implication of that. Substack is incentivized to take my words and feed them (probably for a kickback) into the models of large tech companies, which will sell them back to other consumers, reproduced as thoughts those people can post in their own newsletters.

Without crediting me, without verifying if what I am writing is true.

Alright, cry me a river, so someone could steal your newsletter. Sounds innocuous, if not innocent…

But what if that were a photo of you instead, hidden deep in Instagram’s settings? What if it were your child’s voice, borrowed from voicemails they left you? What if it were your life’s work?

Small design choices will have massive impacts on our sense of personal ownership, our trust, and our relationships to each other – relationships increasingly shaped by the content and experiences we share online.

Form and function

This is what I’m curious about, and what I’m going to share here.

I’m a designer. My profession is to understand how people interact with each other, products, organizations, and institutions, and to nudge behavior through content and software to make those interactions better.

Hopefully, better for the person using them, not just for the company, institution, what have you.

As computation (AI) changes these relationships, these interfaces and interactions will change too. Our design needs to change with it. What we design, how we design, where we design, who we design with.

And who designs. Or, without getting into the “everyone is a designer” debate, let me restate this: who takes ownership of the end experience, and who influences it in an intentional way. Prompt engineers, model builders, business operators, and more will all have an impact on how this technology affects people, and on what incentives or loops are built into the products they interact with.

You may be familiar with the phrase “form follows function.” In plain terms, this means the shape of something should be based on what I want to do with it.

Right now form is driving function. We are in the early stages of this technology impacting our world, and we don’t know enough about it to push back yet.

But we’re starting…

Future topics

I chose the title of this blog intentionally. I’m going to try to balance the content between patterns in the wild, patterns of work and experience, and the occasional deep theoretical post (which I’ll try to keep to a minimum).

Some of the topics I plan to write about include:

- Emerging visual and interaction patterns in AI products

- How it’s shaping how we work

- The behavioral and societal impact of this technology entering our lives

- How we can lead teams and organizations through these changes

- How we need to shape ourselves with new skills and mindsets

Send me a note if you have other ideas, and please subscribe to follow the newsletter. Better yet, share it. The bigger this community is, the faster we can move together.